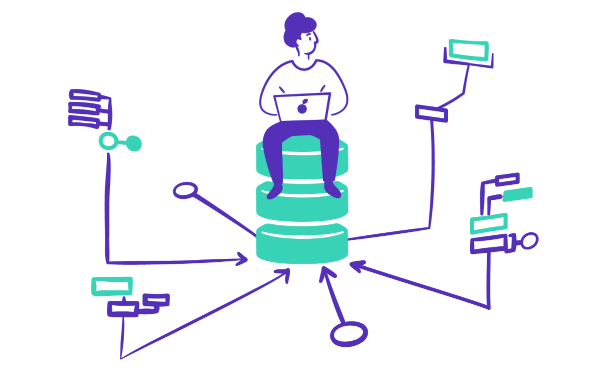

Managing data can be difficult even for the most experienced professionals. Working with a data warehouse, however, lets you track data more effectively and drive smart decision-making. A data warehouse is a central system for storing and reporting on data: the data typically originates in multiple systems and is then moved into the warehouse for long-term storage and analysis. An automated data warehouse goes a step further, automatically storing, processing, and analyzing large amounts of data. Businesses often use automated data warehouses to support data-driven strategy development, measure performance against KPIs, and surface data to customers. Some refer to this model as automation as a service.

One of the key benefits of an automated data warehouse is its ability to handle large volumes of data efficiently and accurately. These systems are designed to scale, and many can process billions of rows without a noticeable drop in performance. Data automation tools can also help users manage data from a variety of sources, including transactional databases, log files, social media platforms, and ad platforms.

Another advantage of automated data warehousing — and automation in data engineering in general — is that it can enable advanced analytics on data. Data warehouses can be used to transform data, and they integrate with business intelligence (BI) tools, allowing even less technical users to visualize and analyze data. They can also be paired with machine learning capabilities, helping reveal insights and trends that might not be immediately apparent to humans or would require a burdensome amount of time to uncover. For example, an automated data warehouse might be used to identify patterns in customer behavior or to predict future demand for products.

Mozart Data helps users centralize and store data in warehouses so that they can access it in one place rather than having to switch between disparate platforms to find what they need. Mozart offers a comprehensive view of data and allows users to speed up analysis. You can also decrease time to insight by over 70% while creating a universal source of truth for all members of the team to draw from. This will ultimately allow you to save time and money so that you can focus more of your attention on other business matters.

Data visualization and reporting: This component enables users to understand and communicate insights and trends that have been discovered through data analysis.

An automated data pipeline is a system that moves data from one location to another whenever that data is needed. These pipelines can often handle large volumes of data and integrate data from a wide range of sources. They also play a critical role in data observability, helping you better understand your data flow and data lineage.

Automated data pipelines streamline the process of moving and integrating data. They can be configured to run automatically, eliminating the need for manual intervention and reducing the risk of errors. This can help to ensure that data is readily available, which is crucial when making important business decisions.

Additionally, these pipelines can handle complex data transformations, supporting tasks such as filtering, aggregation, and data cleansing to prepare data for analysis or reporting. This eases the burden on organizations, since manual data preparation is time-consuming and error-prone. Automated pipelines can also give you easier access to your data catalog and help you identify issues in the system quickly.
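As a rough illustration of the filtering, aggregation, and cleansing steps described above, the following minimal Python sketch runs them over a few in-memory records. The record fields, the `cleanse`, `keep`, and `revenue_by_region` helpers, and the sample data are all hypothetical; a real pipeline would more likely run equivalent logic in SQL or a transformation tool inside the warehouse.

```python
from collections import defaultdict

# Hypothetical raw order records, as they might arrive from a source system.
RAW_ORDERS = [
    {"order_id": "1", "region": " west ", "amount": "120.50", "status": "paid"},
    {"order_id": "2", "region": "East", "amount": "80", "status": "refunded"},
    {"order_id": "3", "region": "west", "amount": "", "status": "paid"},
    {"order_id": "4", "region": "EAST", "amount": "45.25", "status": "paid"},
]


def cleanse(record):
    """Cleansing: normalize text fields and parse the amount (None if missing)."""
    amount = record["amount"].strip()
    return {
        "order_id": record["order_id"],
        "region": record["region"].strip().lower(),
        "amount": float(amount) if amount else None,
        "status": record["status"].strip().lower(),
    }


def keep(record):
    """Filtering: keep only paid orders that actually have an amount."""
    return record["status"] == "paid" and record["amount"] is not None


def revenue_by_region(records):
    """Aggregation: total paid revenue per region."""
    totals = defaultdict(float)
    for r in records:
        totals[r["region"]] += r["amount"]
    return dict(totals)


cleaned = [cleanse(r) for r in RAW_ORDERS]
report = revenue_by_region(r for r in cleaned if keep(r))
print(report)  # {'west': 120.5, 'east': 45.25}
```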

Modern data pipeline architecture consists of several elements, each of which is designed to help data move through the pipeline at a steady pace. Key elements include:

Data sources: These are the systems or processes that generate data that needs to be collected and processed, such as ad platforms and payments providers.

Data extraction: Data extraction is the process of collecting the data you need from your various sources and moving it onward, typically using a method like Extract-Transform-Load (ETL) or Extract-Load (EL).

Data storage: This is the component that stores the data collected from various sources. Examples include data warehouses, databases, file systems, and data lakes.

Data processing: Data processing deals with the transformation, cleaning, and preparation of data for further analysis or consumption.

Data modeling: The data modeling component of a modern data pipeline architecture or framework defines the structure and relationships of the data, usually in the form of a data model or schema.

When connected together correctly, these elements make up an automated data pipeline and enable businesses to gain a deeper understanding of their data and, ultimately, boost revenue and improve profitability.
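The sketch below wires these elements together in miniature, assuming Python's built-in `sqlite3` module as a stand-in for a real data warehouse. The two source functions, the table names, and the `run_pipeline` helper are hypothetical; each step is commented with the element it represents.

```python
import sqlite3


# Data sources: hypothetical stand-ins for an ad platform and a payments provider.
def ad_platform_source():
    return [("2024-01-01", "search", 50.0), ("2024-01-02", "social", 30.0)]


def payments_source():
    return [("2024-01-01", 200.0), ("2024-01-01", 100.0), ("2024-01-02", 90.0)]


# Data modeling: the schema the warehouse tables follow.
SCHEMA = """
CREATE TABLE ad_spend (day TEXT, channel TEXT, spend REAL);
CREATE TABLE payments (day TEXT, amount REAL);
"""


def run_pipeline():
    # Data storage: an in-memory SQLite database stands in for the warehouse.
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    # Data extraction: pull rows from each source and load them as-is.
    conn.executemany("INSERT INTO ad_spend VALUES (?, ?, ?)", ad_platform_source())
    conn.executemany("INSERT INTO payments VALUES (?, ?)", payments_source())
    # Data processing: combine both sources into a daily reporting table.
    conn.execute("""
        CREATE TABLE daily_summary AS
        SELECT p.day, p.revenue, COALESCE(a.spend, 0) AS spend
        FROM (SELECT day, SUM(amount) AS revenue FROM payments GROUP BY day) AS p
        LEFT JOIN (SELECT day, SUM(spend) AS spend FROM ad_spend GROUP BY day) AS a
          ON p.day = a.day
    """)
    return conn


conn = run_pipeline()
rows = conn.execute("SELECT day, revenue, spend FROM daily_summary ORDER BY day").fetchall()
print(rows)  # [('2024-01-01', 300.0, 50.0), ('2024-01-02', 90.0, 30.0)]
```

Loading raw rows first and transforming them inside the store mirrors the Extract-Load pattern; an ETL variant would do the aggregation in Python before inserting.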

Data warehouse automation tools help organizations automate the process of using, maintaining, and optimizing data warehouses. Automation allows organizations to focus on more strategic tasks, such as data analysis and business decision-making. Some of the key features of data warehouse automation tools include the ability to extract data from a variety of sources, transform and cleanse the data, and load it into a data warehouse.

What’s more, these tools often include features for enabling data warehouse integration, scheduling and executing data pipelines, monitoring the pipeline for errors or issues, and managing the data lifecycle. Data warehouse automation tools can be particularly useful for organizations that have large amounts of data or that need to frequently update their data warehouses. They can also be useful for companies that have limited resources or expertise in data warehousing, as they can significantly reduce the learning curve and make it easier to get started with data warehousing and data warehouse automation.

Automated ETL can be extremely useful for organizations responsible for large amounts of data. There are a number of automated ETL tools available on the market, each with its own set of features and capabilities. ETL automation tools are typically able to:

Extract data from numerous sources.

Transform and cleanse data. Some teams transform data further inside the data warehouse, but automated ETL makes it much easier to put data to use quickly.

Load data into a target system, such as a data warehouse or data lake.

Schedule and execute data pipelines on a regular or real-time basis.

Monitor the pipeline for errors or issues and alert admins if necessary.

Manage the data lifecycle, including tasks such as archiving and purging data as needed.
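Most items in that list depend on the specific tool you choose, but the last one, managing the data lifecycle, is easy to sketch. The example below assumes a hypothetical 90-day retention policy, a hypothetical `events` table, and an in-memory SQLite database in place of a warehouse: rows older than the cutoff are copied to an archive table and then deleted, inside a single transaction.

```python
import sqlite3
from datetime import date, timedelta

RETENTION_DAYS = 90  # hypothetical retention policy


def archive_and_purge(conn, today, retention_days=RETENTION_DAYS):
    """Copy events older than the retention window to events_archive, then delete them."""
    cutoff = (today - timedelta(days=retention_days)).isoformat()
    with conn:  # one transaction: both statements commit together or not at all
        conn.execute(
            "INSERT INTO events_archive SELECT * FROM events WHERE event_date < ?",
            (cutoff,),
        )
        cur = conn.execute("DELETE FROM events WHERE event_date < ?", (cutoff,))
    return cur.rowcount  # number of rows purged from the live table


conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE events (event_id INTEGER, event_date TEXT);
CREATE TABLE events_archive (event_id INTEGER, event_date TEXT);
""")
conn.executemany(
    "INSERT INTO events VALUES (?, ?)",
    [(1, "2023-12-01"), (2, "2024-02-15"), (3, "2024-03-30")],
)
purged = archive_and_purge(conn, today=date(2024, 4, 1))
print(purged)  # 1
```

ISO-formatted date strings compare correctly as text, which is why the cutoff is passed as a string here.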

With ETL automation tools, you can do more with the data that’s available to you. Instead of having to run every process manually, you can set these processes up once and trust that your data will be synced when you need it, saving you time.
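Setting a process up once usually means pairing a scheduler with error handling. The minimal sketch below shows only the error-handling half: a hypothetical `run_with_retries` wrapper retries a sync job with exponential backoff and calls an alert hook if every attempt fails. The job functions and the alert hook are illustrative assumptions; in practice a scheduler such as cron or your platform's orchestrator would invoke the wrapper on a fixed interval.

```python
import time


def run_with_retries(job, attempts=3, base_delay=1.0, alert=print):
    """Run a sync job, retrying with exponential backoff; alert if all attempts fail."""
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return job()
        except Exception as exc:  # a real pipeline would catch narrower error types
            last_error = exc
            if attempt < attempts:
                time.sleep(base_delay * 2 ** (attempt - 1))  # 1s, 2s, 4s, ...
    alert(f"sync failed after {attempts} attempts: {last_error}")
    return None


# Hypothetical job that fails twice (e.g. a source API timing out), then succeeds.
calls = {"n": 0}


def flaky_sync():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("source unavailable")
    return "synced 42 rows"


def broken_sync():
    raise ConnectionError("source unavailable")


alerts = []
result = run_with_retries(flaky_sync, attempts=3, base_delay=0, alert=alerts.append)
print(result, alerts)  # synced 42 rows []
run_with_retries(broken_sync, attempts=2, base_delay=0, alert=alerts.append)
print(alerts)  # ['sync failed after 2 attempts: source unavailable']
```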

Before getting started with these tools, it’s a good idea to have an ETL automation framework in place. This type of framework includes tools, processes, and best practices that are designed around ETL. These frameworks are generally used in conjunction with automated ETL tools for the purpose of providing a structured approach to building and maintaining data pipelines.

ETL automation frameworks can help organizations improve the efficiency and effectiveness of their data pipelines by providing a set of standardized components and patterns that can be used to build and maintain data pipelines. Some common features of ETL automation frameworks include the following:

Best practices: Best practices encompass guidelines and recommendations for building and maintaining data pipelines. For instance, they might offer guidance on technical topics like data modeling and governance, as well as more common-sense decisions, such as how often different data sources should be synced or which data should be moved to a data warehouse.

Tools and utilities: Tools and utilities associated with ETL, such as scheduling and monitoring tools, can be used to build and maintain data pipelines. A tool like Mozart Data’s modern data platform includes many of the tools companies commonly use to maintain their pipeline integrity.
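A best practice such as how often each data source should be synced can be captured as configuration that tooling then enforces. The sketch below assumes a hypothetical `SYNC_POLICY` mapping and hypothetical source names; `due_sources` returns the sources whose sync interval has elapsed or that have never been synced.

```python
from datetime import datetime, timedelta

# Hypothetical per-source sync policy a team's best practices might define.
SYNC_POLICY = {
    "payments": timedelta(minutes=15),  # revenue data: sync often
    "ad_platform": timedelta(hours=6),
    "crm": timedelta(days=1),  # slow-changing data: sync daily
}


def due_sources(last_synced, now, policy=SYNC_POLICY):
    """Return, sorted, the sources that have never synced or whose interval has elapsed."""
    return sorted(
        source
        for source, interval in policy.items()
        if source not in last_synced or now - last_synced[source] >= interval
    )


now = datetime(2024, 1, 1, 12, 0)
last = {
    "payments": datetime(2024, 1, 1, 11, 30),  # 30 min ago: due
    "ad_platform": datetime(2024, 1, 1, 9, 0),  # 3 h ago: not yet due
}
print(due_sources(last, now))  # ['crm', 'payments']
```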

Becoming familiar with some of the key tools and components involved in the ETL process is crucial for anyone working with data. ETL can help speed things up, offering insight into aspects of your data that you might have otherwise overlooked. You might also browse ETL automation ideas online to get a better sense of how this process works.

Data transformation refers to the process of converting data from one format or structure to another or changing its accessibility in some way in order to facilitate its use or analysis. While it sounds complicated, sometimes data transformation is as simple as removing duplicate values from a table or combining two relevant data sources in one table to enable easier, more impactful analysis.
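Both of the simple transformations mentioned above, removing duplicates and combining two sources into one table, fit in a few lines of Python. The CRM and billing records and the `dedupe` and `combine` helpers below are hypothetical; `combine` behaves like a SQL left join on a shared key.

```python
# Hypothetical rows from two sources: a CRM export and a billing system.
crm_customers = [
    {"customer_id": 1, "email": "ana@example.com"},
    {"customer_id": 2, "email": "bo@example.com"},
    {"customer_id": 1, "email": "ana@example.com"},  # duplicate row
]
billing = [
    {"customer_id": 1, "plan": "pro"},
    {"customer_id": 2, "plan": "free"},
]


def dedupe(rows, key):
    """Remove duplicates, keeping the first row seen for each key value."""
    seen = {}
    for row in rows:
        seen.setdefault(row[key], row)
    return list(seen.values())


def combine(left, right, key):
    """Left-join two lists of dicts on a shared key into a single table."""
    right_by_key = {row[key]: row for row in right}
    return [{**row, **right_by_key.get(row[key], {})} for row in left]


customers = combine(dedupe(crm_customers, "customer_id"), billing, "customer_id")
print(customers)
```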

This process is often a key step in preparing data for deeper analysis, and it helps companies make the most of the other data tools they’re using. For example, a company working with a data warehouse or data lake will likely use data transformation to get more value from different types of data. Unstructured data is increasingly valuable to companies, and it can be stored as-is and transformed later with data transformation tools.
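Storing data as-is and transforming it later is often called schema-on-read. In the minimal sketch below, JSON strings stand in for loosely structured raw data (strictly speaking, JSON is semi-structured rather than unstructured). The strings are kept exactly as received and only parsed into named columns at transformation time, with unparseable rows counted instead of stopping the job. The event fields and the `transform_later` helper are hypothetical.

```python
import json

# Raw events stored exactly as received; no schema is imposed at load time.
raw_events = [
    '{"user": "ana", "action": "signup", "meta": {"source": "ad"}}',
    '{"user": "bo", "action": "login"}',
    "not valid json",
]


def transform_later(raw_rows):
    """Parse stored raw events into structured rows; count rows that fail to parse."""
    rows, rejected = [], 0
    for text in raw_rows:
        try:
            event = json.loads(text)
        except json.JSONDecodeError:
            rejected += 1
            continue
        rows.append({
            "user": event.get("user"),
            "action": event.get("action"),
            "source": event.get("meta", {}).get("source"),  # optional nested field
        })
    return rows, rejected


rows, rejected = transform_later(raw_events)
print(rows, rejected)
```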

Automated data transformation goes hand-in-hand with this process and enables businesses to gain relevant insights into their data. With a data stack like Mozart Data, customers can easily automate these critical data transformations in their Snowflake data warehouse, resulting in an automated data pipeline that helps all users at an organization operate in a data-driven manner.
