Best ETL Tools for Snowflake

Save time and resources loading data into your warehouse

Snowflake data warehouses are a leading solution for data storage because of how they rapidly scale to meet the demands of big data.

Snowflake accomplishes this by decoupling storage and processing, enabling searches and data transforms to use more resources during peaks or reduce costs when not in operation.

Built with flexibility for scale, Snowflake is a leading challenger to big data systems that use Hadoop.

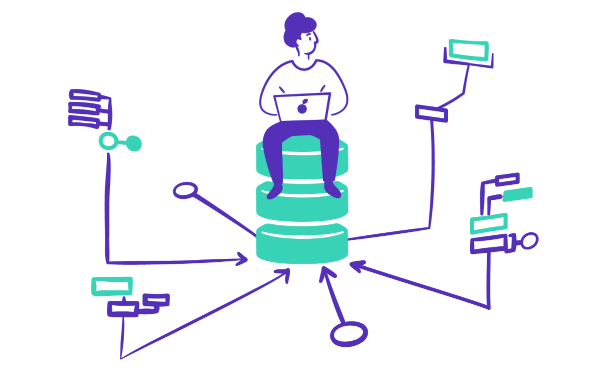

Centralizing data in a Snowflake data warehouse makes it easier to process, but Snowflake alone doesn’t solve all your data problems. You need to get data into Snowflake and the ideal solution for that is an ETL tool.

To get the most out of Snowflake, seek out ETL tools for big data that can handle the scale of Snowflake and play to its strengths.

When looking for the best ETL tools for Snowflake, you’ll find the most success with a dedicated Snowflake ETL partner that is purpose-built to work with Snowflake. Contact us to set up a demo and see how Mozart Data ETL works with Snowflake to ensure the greatest accuracy, security, and reliability.

Before looking at Snowflake-specific ETL solutions, let’s take a minute to step back and define what ETL means.

ETL stands for extract, transform, and load. ETL tools connect data from sources throughout an organization to make it easier for team members to access it, analyze it, and make data-driven decisions.

Within any organization, centralizing data is a challenge because applications and devices are not all built to share data in the same way. ETL brings standardization and automation to data centralization, ensuring data is properly delivered to a storage location like a data warehouse.

To get a better understanding of ETL, let’s look at some technical ETL FAQs.

What is ETL in Big Data?

The more data your organization generates, the more likely you will need a dedicated ETL tool to manage it. ETL can make managing big data easier, breaking up data shipments and automatically scheduling them to manage the load on downtimes.

What is ETL in Data Warehouse?

ETL tools are the most reliable and secure way to move data to scalable cloud storage locations known as data warehouses. Snowflake is an example of a leading data warehouse.

What is ETL in SQL?

The main way through which users create transformations in the ETL processes is through SQL-based transformations. SQL stands for the structured query language. It can also be used by an ETL developer to search data and create data connector schemas.

An example of an SQL query, here’s a search for the ten most recent transactions from the financial transaction tool Stripe:

select

date_format(created, ‘%m-%d-%Y’) as day,

id,

amount,

currency,

source_id,

type

from balance_transactions

order by day desc

limit 10

What is ETL testing?

Since ETL moves high volumes of data at high speeds, it’s possible that errors occur in the data transferring. ETL testing is the process of looking at all records that were supposed to be transferred vs. what was actually transferred to identify errors in the process.

What is Modern ETL vs. Traditional ETL?

While ETL is not a new technology for moving data, it has recently undergone a transformation as it has adapted to cloud computing. The transformation marks a difference between traditional ETL and modern ETL.

Uses on-premises resources to ship data

Infrastructure is managed by an internal team of data scientists

The only supported data sources are relational databases (RDBMS)

Uses cloud storage to ship data

Management of infrastructure can be automated, bundled with cloud services

Supports a wide variety of data sources including databases, applications, devices, and semi-structured data

To implement Snowflake, most organizations will use a modern ETL solution because it will work as cloud native tools and be more flexible than traditional options.

ETL tools for Snowflake can also go beyond the simple ETL paradigm to include ELT purposes. In ETL scenarios, data is processed before it is loaded into warehouses. In ELT, the order is switched to make it so users conduct transformations after data is loaded into data warehouses. This difference with ELT allows users to leverage Snowflakes processing resources to conduct more advanced transforms.

Looking at ETL tools for Snowflake, the ones that come out on top have the most connectors and work for both Snowflake ETL and ELT scenarios. All of the tools listed here are cloud ETL tools that work well with both ETL and ELT.

Provides ETL/ELT services for close to 300 pre-built connectors

Has 99.99 percent uptime

Shares live uptime information about all connectors they support on their status page

Easy to set up and operate with no coding required.

Fivetran makes an excellent modern ETL solution for Snowflake. It support one of the widest ranges of connectors and is one of the most reliable options.

Provides ETL/ELT services for over 130 connectors\

Many connectors are provided through Stitch’s Singer open-source program

Similar to Fivetran in price and ease of operation\

While the open source program can lead to more flexibility, it also reduces support opportunities if something goes wrong with a connector. Users often end up building their own connectors using open source tools or augmenting their connectors with tools like Portable.

Supports 300+ pre-built connectors for ETL/ELT

Can build customer long tail connectors with a short lead time

Can be implemented with no code

Everyone’s data system has unique needs and data sources. Sometimes you need custom connectors for your data warehouse. Portable can help build them. Typically Portable is not used on its own, but in combination with a core ETL tool that connects major databases as well.

Provides ETL/ELT services for over 130 connectors

Like Fivetran and Stitch, it supports automated ETL with a no-code interface

Supports both ETL and reverse ETL

The key distinguishing attribute of Hevo is that it supports ETL and reverse ETL, enabling you to easily ship data back to their sources after transforms.

It’s possible that the best solution is to bundle two ETL services together to make sure all connector needs are covered. At Mozart we use Fivetran and Portable together. Fivetran provides the reliable core connectors and Portable provides vital customizations.

Choosing the right ETL tool involves these key considerations:

What connectors do you need?

Do you want a licensed tool or an open source?

How much data do you need to transfer?

What is your budget?

How much support do you need to conduct set-up and maintenance?

The most important question to answer is: which ETL tools have the data connectors for the sources you need to connect? Picking a solution with the maximum number of prebuilt connectors typically will provide the main connectors you need. If you need more connectors, supplementing with an ETL solution like Portable which specializes in custom connectors built-to-order can ensure you have absolutely everything connected.

Another early decision to make is choosing between open source ETL tools and licensed ETL tools.

While open source options have no up-front costs, the time and human capital costs of managing an open source tool can be immense. Choose the best ETL tools that are appropriate for your team and the amount of time it can dedicate to data management. If you have a complex system with many data sources that all require maintenance to their connections, it’s best to use a tool that comes with a license and provides support.

For data flows that require high reliability, licensed tools are preferable as well because if there is a failure, with a licensed tool you will have expert support help. With an open source tool you will be trying to fix it all by yourself.

One more reason to choose a licensed tool over an open source tool is that modern licensed ETL tools like ones we’ve listed (Hevo, Stitch, Fivetran, and Portable) make set up easy. If you’re using an open source solution, expect all processes to take significant input from your developers and you may need to hire additional specialists to design your data pipeline.

Other considerations include ability to automate data loads, conduct streaming data, and size of workloads. If you’re planning on joining together big data, look for an ETL solution built for scale like Fivetran. Fivetran supports streaming real-time data for big data workloads while minimally impacting the resources of the data source.

Keep in mind that the best solution may be actually choosing two solutions. If you choose two tools, you can balance your workloads between them and reduce cost.

While Snowflake has virtually unlimited compute and storage resources, the people who use it don’t have unlimited funding to pay for unlimited resources! See how to get the most you can out of Snowflake by implementing our Snowflake ETL best practices.

Our suggestions can help you minimize operating costs, cut down and management time, and have more reliable data warehouse storage.

Snowflake ETL Best Practices

Have a separate dedicated virtual warehouse for each process – By separating incoming ETL from ELT and other data warehouse activities, you comply with Snowflake data architecture best practices. This makes it easy to manage your data and also it’s a good idea to track the resources used by using a resource monitor. You can impose credit quotas based on day, week, month, year or total.

Set timeouts – For ETL and ELT, setting timeouts for when your Snowflake ETL tools complete loading can save resources and operating costs.

Know when to use ETL paradigm vs ELT – By using an ETL paradigm, you can save data warehouse resources if you don’t need all raw data from your data sources. The advantage to using ELT is that it is more adaptable to changing transform needs. The best ETL tools for Snowflake can accommodate either.

Keep transforms simple – It’s easier to maintain SQL queries that are shorter so break up long complex queries into a series of steps if possible. While easier to maintain, it also can make querying more time-efficient.

Add Snowflake Code to your CI/CD pipeline – Don’t forget to treat code in Snowflake like any other code. Integrate it and test it to prevent code degradation and failures. You’ll want to include SQL code that manages transforms, scripts, and stored procedures.

The most important thing to look for in your ETL tool is the ability to connect the data sources you need. Find out how Mozart works with Fivetran and Portable to leverage the best ETL tools for Snowflake. Contact us to see a demo.

Resources