Understanding Data Pipeline Tools

Coordinating the movement of your data to a central location

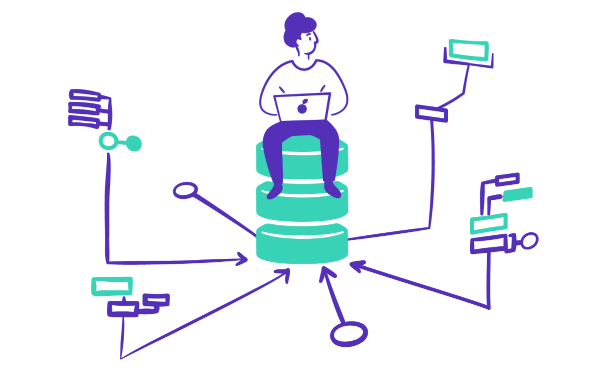

“Data pipeline tool” is a term that isn’t consistently defined across the data world. A fairly widely accepted definition is a solution that extracts data from disparate sources and moves it to another location for storage and analysis, but that definition might not cover everything. Some data experts would also include features that make pipelines more useful, such as user interfaces that make pipelines easy to view, scheduling, and tools for transforming data. Others would say that moving data without coordination does not constitute a true pipeline: a data pipeline tool must be able to move data through a sequence of dependent steps, each of which runs only once the steps it relies on have completed.
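To make the narrowest definition concrete, here is a minimal sketch of a pipeline that only extracts records from two disparate sources and lands them in one central table. It assumes two hypothetical sources (a CSV export and a JSON API response, both inlined as strings) and uses an in-memory SQLite database as a stand-in for a warehouse; the table, column, and source names are illustrative only.

```python
import csv
import io
import json
import sqlite3

# Hypothetical payloads standing in for two disparate systems:
# a CSV export (e.g., from a billing tool) and a JSON API response (e.g., from a CRM).
CSV_SOURCE = "customer_id,amount\n1,19.99\n2,5.00\n"
JSON_SOURCE = '[{"customer_id": 3, "amount": 42.5}]'


def extract_csv(text):
    """Parse CSV text into (customer_id, amount) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [(int(r["customer_id"]), float(r["amount"])) for r in reader]


def extract_json(text):
    """Parse a JSON array of records into the same tuple shape."""
    return [(int(r["customer_id"]), float(r["amount"])) for r in json.loads(text)]


def load(conn, rows, source):
    """Append rows to one central table, tagging each row with its source."""
    conn.executemany(
        "INSERT INTO payments (customer_id, amount, source) VALUES (?, ?, ?)",
        [(cid, amt, source) for cid, amt in rows],
    )


def run_pipeline():
    conn = sqlite3.connect(":memory:")  # stand-in for a central warehouse
    conn.execute("CREATE TABLE payments (customer_id INTEGER, amount REAL, source TEXT)")
    load(conn, extract_csv(CSV_SOURCE), "billing_csv")
    load(conn, extract_json(JSON_SOURCE), "crm_json")
    conn.commit()
    return conn


if __name__ == "__main__":
    conn = run_pipeline()
    query = "SELECT source, COUNT(*) FROM payments GROUP BY source ORDER BY source"
    for row in conn.execute(query):
        print(row)  # ('billing_csv', 2) then ('crm_json', 1)
```

Tagging each row with its source is a small design choice that makes it easier to answer “where did this come from?” once data from many systems sits in one place.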

To find the best data orchestration tools for your business, it can be helpful to consider the challenges you’re currently facing with your data pipeline. For example, you might be having trouble getting your data to the right place. You might also be struggling to visualize that data once it has arrived at the intended destination. There are many issues you may face when working with a data pipeline, and the right tools can help mitigate these difficulties.

It’s also important to understand data pipeline architecture and the critical role it plays in successfully implementing pipeline tools. In short, a data pipeline architecture is a framework that outlines how data should be captured, processed, and stored. Following established best practices helps keep that process smooth and efficient. These practices include documenting your ingestion sources, designing for scalability, and continually testing data to ensure quality. Together, they serve as a guide for building a solid, modern framework and for avoiding common pitfalls when working with data pipelines.
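Continually testing data can start as a handful of checks run on every batch before it is loaded. The sketch below assumes records arrive as Python dictionaries and uses hypothetical column names (`order_id`, `amount`) and an arbitrary allowed range; in practice, equivalent checks often run inside the warehouse or a dedicated data-testing tool.

```python
from dataclasses import dataclass


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str


def check_not_null(rows, column):
    """Fail if any record is missing a value for `column`."""
    missing = sum(1 for r in rows if r.get(column) is None)
    return CheckResult(f"not_null:{column}", missing == 0, f"{missing} null value(s)")


def check_unique(rows, column):
    """Fail if any non-null value of `column` appears more than once."""
    values = [r[column] for r in rows if r.get(column) is not None]
    dupes = len(values) - len(set(values))
    return CheckResult(f"unique:{column}", dupes == 0, f"{dupes} duplicate(s)")


def check_range(rows, column, low, high):
    """Fail if any non-null value of `column` falls outside [low, high]."""
    bad = sum(
        1 for r in rows if r.get(column) is not None and not low <= r[column] <= high
    )
    return CheckResult(f"range:{column}", bad == 0, f"{bad} value(s) out of range")


def run_checks(rows):
    # Hypothetical rules for an orders batch; adjust columns and bounds to your data.
    return [
        check_not_null(rows, "order_id"),
        check_unique(rows, "order_id"),
        check_range(rows, "amount", 0, 10_000),
    ]


if __name__ == "__main__":
    batch = [
        {"order_id": 1, "amount": 25.0},
        {"order_id": 1, "amount": -5.0},
        {"order_id": None, "amount": 12.0},
    ]
    for result in run_checks(batch):
        print(result.name, "PASS" if result.passed else "FAIL", result.detail)
```

A pipeline would typically stop, or quarantine the batch, when any check fails, so bad records never reach downstream reports.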

Once you have your tools and architecture in place, you can get started with data pipeline orchestration: the process of coordinating and automating the pipeline workflow. Today, there are many kinds of data pipeline technologies, so it’s important to research and weigh your options before implementing any new solution. Finding the right pipeline tools can be difficult, but it is critical to the success of your data management strategy. Tools with robust automation capabilities are especially helpful because they run routine workflow steps without manual intervention. Automating your pipeline processes is one of the best ways to make your workflows more efficient and your data management strategy more effective.
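At its core, orchestration means running steps in dependency order and deciding what happens when a step fails. The sketch below uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+) to order a set of hypothetical tasks, and it skips any task whose upstream dependencies did not succeed. It assumes every dependency is itself a registered task; real orchestrators add scheduling, retries, and parallelism on top of this idea.

```python
from graphlib import TopologicalSorter


def run_dag(tasks, deps):
    """Run tasks in dependency order.

    tasks: mapping of task name -> zero-argument callable
    deps:  mapping of task name -> set of upstream task names
    Returns a mapping of task name -> "success", "failed", or "skipped".
    """
    sorter = TopologicalSorter()
    for name in tasks:
        sorter.add(name, *deps.get(name, ()))

    status = {}
    for name in sorter.static_order():  # raises CycleError if deps contain a cycle
        upstream = deps.get(name, set())
        if any(status.get(u) != "success" for u in upstream):
            status[name] = "skipped"  # a dependency failed or was itself skipped
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:  # broad catch so one bad task doesn't halt the whole run
            status[name] = "failed"
    return status


# Hypothetical tasks for illustration.
def extract():
    print("extracting")


def transform():
    raise ValueError("unexpected schema")


def load():
    print("loading")


def notify():
    print("notifying")


if __name__ == "__main__":
    tasks = {"extract": extract, "transform": transform, "load": load, "notify": notify}
    deps = {"transform": {"extract"}, "load": {"transform"}, "notify": {"extract"}}
    print(run_dag(tasks, deps))
```

Here `load` is skipped because `transform` fails, while `notify`, which depends only on `extract`, still runs. That is the “contingent steps” behavior that distinguishes a coordinated pipeline from simply copying data.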

Mozart Data provides a modern data platform for users to centralize and analyze their data. By centralizing and transforming your data this way, you can obtain more actionable insights to drive better decision-making. With Mozart Data, you can decrease time to insight by over 70% and create a single source of truth that keeps everybody on the same page. Additionally, automating transforms saves time and money. Whereas many data management tools are challenging to set up and use, Mozart Data makes it easy for businesses to get started with the tools they need to transform their data and use it more efficiently.

Data pipeline frameworks are systems that define how data should move through pipelines and what should happen to it when it arrives at its destination. When developing a framework for your own business, think about your specific needs and your ultimate goals for your data. Every business is unique, and your framework should reflect that. There are many ways to structure and organize your data, but following standard best practices helps regardless of your specific needs: it ensures you cover all the key points and are well positioned to move forward with whichever tools and framework you choose.

The best data pipeline tools let users customize their workflows as needed to make their processes more efficient. Not all tools are designed equally, so it may take some experimentation to find the solutions best suited to your company. Some tools are designed to handle the demands of large organizations, while others are built to be simple and easy to use so that smaller companies can manage their workflows effectively. Finding the right tools is crucial to improving tasks and using your time well, and although the search can be tricky, it is worth it for a better data pipeline.

ETL data pipeline tools extract, transform, and load data, and they are a common choice. Because they cover all three stages, they are useful throughout the data pipeline rather than at a single step. Learning to use these tools, especially alongside data analysis tools, is a good first step toward automating your workflow. Instead of managing each task by hand, which can eat up your time, you can run tasks automatically using solutions designed specifically to simplify data management.
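A minimal ETL job shows how the three stages divide the work. This sketch assumes a hypothetical CSV export of sign-ups (inlined as a string), applies two illustrative cleaning rules in the transform step (normalizing email and plan values, and dropping rows with no email), and loads the result into an in-memory SQLite table standing in for a warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw export; note the stray whitespace, mixed case, and missing email.
RAW_SIGNUPS = """email,plan,signup_date
  Alice@Example.com ,pro,2024-01-05
bob@example.com,FREE,2024-01-06
,pro,2024-01-07
"""


def extract(text):
    """Extract: parse raw CSV text into a list of dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(records):
    """Transform: normalize values and drop rows without an email."""
    cleaned = []
    for r in records:
        email = r["email"].strip().lower()
        if not email:
            continue  # cannot attribute this sign-up to a user
        cleaned.append((email, r["plan"].strip().lower(), r["signup_date"].strip()))
    return cleaned


def load(rows, conn):
    """Load: write cleaned rows into the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS signups (email TEXT, plan TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO signups VALUES (?, ?, ?)", rows)
    conn.commit()


def run_etl(text, conn):
    load(transform(extract(text)), conn)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    run_etl(RAW_SIGNUPS, conn)
    print(conn.execute("SELECT * FROM signups ORDER BY email").fetchall())
```

Because `run_etl` wraps all three stages in one call, it is the kind of function a scheduler or orchestrator can run automatically instead of someone repeating the steps by hand.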

Open-source ETL tools have publicly available source code that anyone can modify and redistribute. They are an option for businesses that are unable or unwilling to build tools from scratch but want the flexibility to change the source code as needed.

There are many types of open-source tools for data management. Open-source orchestration tools, for instance, suit businesses that want to tailor orchestration to their specific processes. Because you can set up your pipeline and framework in many different ways, a flexible solution can help when customizing these processes.

Open-source pipeline framework tools and open-source ETL tools can both help you build a good data pipeline. As discussed previously, ETL tools extract, transform, and load data and are well suited to managing pipelines holistically. With open-source ETL tools, you can exercise greater control over how those tools manage your workflow.

However, many of the leading ETL and data pipeline tools are not open-source. Before choosing an open-source ETL tool, you should consider the gaps in your workflow and what you are ultimately aiming to accomplish. Do you need a specific capability that other tools cannot provide? Do you have the personnel resources (both skills and time) to take advantage of open-source tools? Do the tools have a disadvantage that offsets their added capabilities, like cost or difficulty of use?

You should also think about integration capabilities and whether you plan to integrate your new tools with your existing solutions. Many tools provide a much-needed boost to workflow efficiency when used together. Whether or not you choose open-source tools, check that they are compatible with the tools you already use.

Data observability is a multi-faceted process with many moving parts. Getting data through the pipeline to where it’s meant to be is just one aspect of data management, which is why it’s important to think about pipelines from a high level. A wide range of data pipeline management tools can help users monitor their pipelines more effectively. For example, a data pipeline monitoring dashboard gives you relevant, real-time insight into your process, so you can see exactly what is happening with your data at any given time. This makes it easier to resolve issues as they arise and to take note of what is going well.
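Behind a monitoring dashboard sits a simple idea: record the outcome of every pipeline run and summarize it per pipeline. The sketch below assumes a hypothetical `RunRecord` structure and computes the latest status, failure rate, and average duration for each pipeline. It summarizes an in-memory list once; a real dashboard would refresh these figures continuously from a persisted run log.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime


@dataclass
class RunRecord:
    pipeline: str
    started: datetime
    duration_s: float
    succeeded: bool


def summarize(runs):
    """Return per-pipeline health metrics suitable for a dashboard tile."""
    by_pipeline = defaultdict(list)
    for run in runs:
        by_pipeline[run.pipeline].append(run)

    summary = {}
    for name, records in by_pipeline.items():
        records.sort(key=lambda r: r.started)  # oldest first, so [-1] is the latest run
        latest = records[-1]
        summary[name] = {
            "last_status": "ok" if latest.succeeded else "FAILED",
            "failure_rate": sum(not r.succeeded for r in records) / len(records),
            "avg_duration_s": sum(r.duration_s for r in records) / len(records),
        }
    return summary


if __name__ == "__main__":
    runs = [
        RunRecord("orders", datetime(2024, 5, 1, 6, 0), 12.0, True),
        RunRecord("orders", datetime(2024, 5, 2, 6, 0), 18.0, False),
        RunRecord("users", datetime(2024, 5, 2, 6, 5), 4.0, True),
    ]
    for name, stats in summarize(runs).items():
        print(name, stats)
```

Surfacing `last_status` next to the longer-term `failure_rate` helps separate a one-off failure from a pipeline that fails regularly.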

Data lineage tools are an important part of data observability. They help users visualize their data pipelines: where data comes from, where it goes, how different data sets interact with each other, and how data is manipulated along the way. With a robust tool, you can view all dependencies and be alerted to any failures instead of monitoring everything manually. This speeds up your workflow, makes your processes more consistent, and helps keep the rest of your team aligned on common goals. Data pipeline monitoring tools play a critical role in data management and are a must-have for any business that is struggling to keep up with workflow demands.
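Lineage is essentially a directed graph of datasets, so the questions in the paragraph above (where data comes from, and what a failure affects) become graph traversals. This sketch assumes a small, hand-written lineage map with hypothetical table names; lineage tools typically derive such a graph automatically rather than relying on a manually maintained dictionary.

```python
from collections import deque

# Hypothetical lineage map: each dataset feeds the datasets listed as its value.
LINEAGE = {
    "raw.billing_charges": ["staging.payments"],
    "raw.crm_accounts": ["staging.accounts"],
    "staging.payments": ["marts.revenue_by_account"],
    "staging.accounts": ["marts.revenue_by_account", "marts.account_health"],
    "marts.revenue_by_account": ["dashboards.exec_kpis"],
}


def downstream_of(node, lineage):
    """Breadth-first search for every dataset that depends on `node`."""
    seen = set()
    queue = deque(lineage.get(node, []))
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        queue.extend(lineage.get(current, []))
    return seen


def upstream_of(node, lineage):
    """Invert the edges, then reuse the same traversal to find every source of `node`."""
    parents = {}
    for source, targets in lineage.items():
        for target in targets:
            parents.setdefault(target, []).append(source)
    return downstream_of(node, parents)


if __name__ == "__main__":
    print(sorted(downstream_of("raw.crm_accounts", LINEAGE)))
    print(sorted(upstream_of("dashboards.exec_kpis", LINEAGE)))
```

If `raw.crm_accounts` fails to load, `downstream_of` returns every dataset that may now be stale, which is the set an alert should mention; `upstream_of` answers the reverse question of where a dashboard’s numbers come from.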

The modern approach to data pipeline observability combines transparency with efficiency, automating manual tasks to save users time and prevent human error. Mozart Data’s data observability tools do just that, giving users complete visibility into their pipelines so that they can identify and fix problems with ease. You can track changes and stay up to date with everything happening in your pipeline. Tools like these are especially valuable for those who manage complex data pipelines. Staying on top of everything at all times can be difficult and exhausting, but data pipeline tools simplify the way businesses manage their data, which leads to greater quality and consistency across it.

Resources